Design System Benchmarking at Scale

Solo UX Researcher | 13 weeks | Quantitative + Systems Research

TL;DR: Built the first longitudinal benchmark for a multi-team design system, enabling leadership to track adoption, confidence, and usability over time, and prioritize roadmap decisions with evidence.

WHAT THIS CASE DEMONSTRATES

Designing a repeatable, decision-ready research system in a complex, multi-stakeholder environment, and using it to guide roadmap, adoption, and governance decisions over time.

THE DECISION GAP

Viasat’s design system, Beam, had been in use for over three years and was rapidly scaling across teams. While adoption was growing, leaders lacked reliable, longitudinal evidence to answer critical questions:

Is Beam improving usability and confidence across roles?

Where is the system enabling velocity, and where is it creating friction?

How should the team prioritize investments ahead of major releases?

One-off feedback existed, but there was no standardized way to measure experience, compare releases, or guide decisions over time.

My role: Design and execute the first-ever longitudinal measurement system for Beam — one that could scale, reflect different stakeholder realities, and be reused across future releases.

RESEARCH CONSTRAINTS

This work needed to operate within real organizational constraints:

Scale

300+ users across designers, developers, PMs, and product owners.

Repeatability

Metrics had to remain comparable across releases, not capture one-time insights.

Depth

Metrics had to remain comparable across releases, not capture one-time insights.

Relevance

“Success” meant different things to different roles.

RESEARCH STRATEGY

I designed a reusable longitudinal benchmark that balanced rigor, relevance, and scalability across releases. The study:

Covered all major interaction points (onboarding, usage, support, feedback).

Used role-based logic to ensure relevance without fragmenting metrics.

Prioritized signals that could be tracked release-over-release.

The goal was not just to understand what was happening now, but to create a measurement system the team could trust over time.

SELECTED RESEARCH ARTIFACTS

To ensure the study was both rigorous and reusable, I created a small set of foundational artifacts:

Longitudinal Survey Framework

A role-based Qualtrics structure with conditional routing to ensure relevance while preserving year-over-year comparability.
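As a rough illustration of the idea (not the actual Qualtrics configuration), role-based routing can be thought of as a shared core block that every respondent answers, plus a short role-specific module. The question IDs and role keys below are hypothetical placeholders.

```python
# Hypothetical question IDs, for illustration only.
# The core block is shown to every respondent, which keeps these
# metrics comparable across roles and across releases.
CORE_QUESTIONS = ["confidence_overall", "usability_overall", "satisfaction_overall"]

# Hypothetical role-specific modules appended after the core block.
ROLE_MODULES = {
    "designer": ["component_library_fit"],
    "developer": ["onboarding_docs_awareness"],
    "pm": ["sprint_prioritization"],
    "product_owner": ["roadmap_visibility"],
}


def questions_for(role: str) -> list[str]:
    """Return the ordered question list for a respondent's role."""
    if role not in ROLE_MODULES:
        raise ValueError(f"unknown role: {role}")
    # Core questions always come first and are never altered per role.
    return CORE_QUESTIONS + ROLE_MODULES[role]
```

Because the core block is identical for every role, role-specific questions can change between releases without disturbing the trend lines built on the core metrics.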

Metric Definition Sheet

Clear definitions for adoption, confidence, usability, and engagement to prevent metric drift across releases.
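One way to picture a metric definition sheet is as a set of fixed records that every release is scored against. The sketch below assumes a simple numeric rating scale and hypothetical field names; it is not the sheet used in the study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)  # frozen: a definition cannot be changed after creation
class MetricDefinition:
    name: str          # e.g. "confidence" (hypothetical label)
    question_id: str   # survey item the metric is computed from (hypothetical)
    scale_min: int
    scale_max: int


def release_delta(defn: MetricDefinition, previous: list[int], current: list[int]) -> float:
    """Mean score change between two releases for one metric."""
    if not previous or not current:
        raise ValueError("both releases need at least one response")
    for score in previous + current:
        # Reject scores outside the defined scale so a changed scale
        # cannot silently distort the trend.
        if not defn.scale_min <= score <= defn.scale_max:
            raise ValueError(f"score {score} outside scale for {defn.name}")
    return mean(current) - mean(previous)
```

Freezing the definition and validating scores against it is one simple guard against metric drift: a release scored on a different scale fails loudly instead of producing a misleading trend.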

Pilot & Iteration Notes

Iterative feedback cycles with senior researchers to validate neutrality, clarity, and longitudinal validity.

Stakeholder Interaction Map

Mapped how designers, developers, PMs, and product owners interact with Beam to define role-specific success criteria and survey logic, ensuring the benchmark reflected real decision contexts.

KEY JUDGEMENT CALLS

Prioritized comparability over customization

I intentionally avoided overly tailored questions — even when requested — to preserve clean longitudinal comparisons across releases.

Tradeoff: Slightly less personalization in exchange for stronger trend analysis and higher decision confidence.

Designed for stakeholder trust, not just data collection

Each role experienced Beam differently, so success metrics were aligned to how decisions are actually made, not just how the system is used.

Tradeoff: More upfront design effort, but stronger buy-in and long-term reuse.

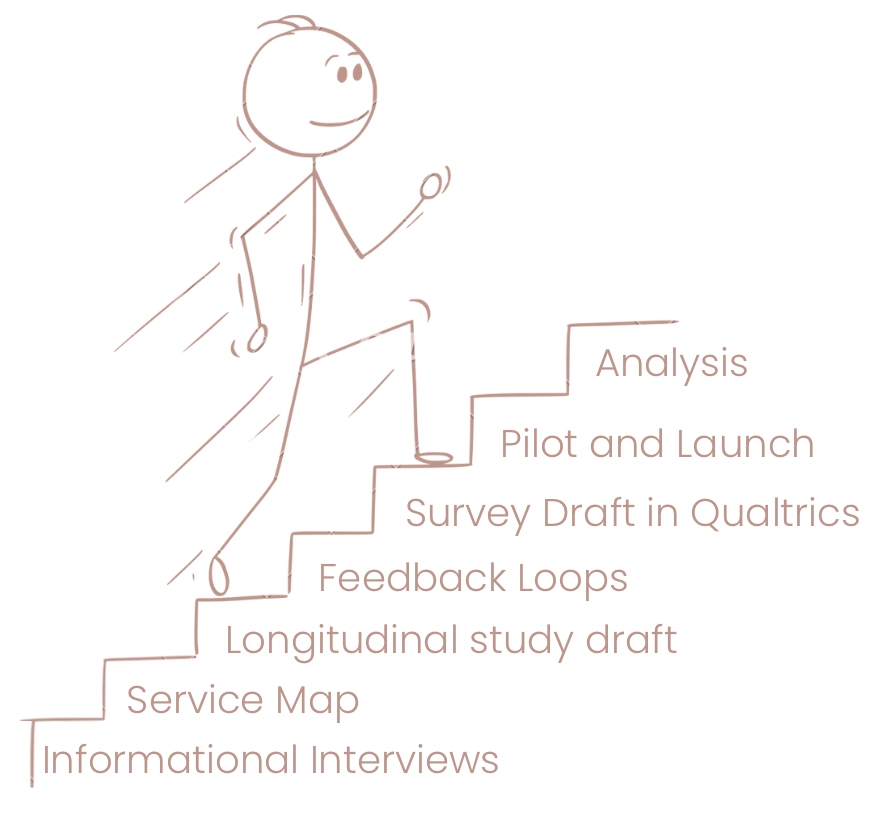

EXECUTION OVERVIEW

1. Stakeholder Interviews clarified interaction points, expectations, and definitions of success across roles.

2. Service Mapping helped visualize how Beam supports onboarding, daily use, support, and feedback loops.

3. Survey Design in Qualtrics used custom routing logic, and multiple pilots helped reduce ambiguity and fatigue.

4. Launch & Distribution ran via email and Slack, aligning with existing Beam support channels.

KEY FINDINGS (AND WHY THEY MATTERED)

The survey closed with 49 responses across designers, developers, PMs, and product owners, prioritizing actionable input ahead of the next major release.

What worked

High confidence and satisfaction across all roles.

Onboarding experience rated consistently strong.

What did not

Developers were unaware of existing onboarding documentation.

Office hours and feedback mechanisms were underutilized.

Beam updates were rarely prioritized in sprint planning without explicit guidance.

These insights shifted the conversation from “Is Beam good?” to “Where should we invest next?”

IMPACT

Improved 3 of 5 core KPIs related to adoption, engagement, and usage confidence.

Established the first repeatable benchmarking framework for the design system.

Enabled the Beam team to track experience trends across releases and make evidence-based roadmap decisions.

WHY THIS MATTERS

This work helped the team move from reactive feedback to proactive system stewardship, treating the design system as a product with measurable outcomes, not just a shared resource.

WHAT I’LL CARRY FORWARD

‘UX Research is a little bit of science and a lot of art’

Designing research systems is as much about governance and trust as it is about methods.

Longitudinal studies require restraint — what you don’t measure matters as much as what you do.

Strong research often means saying no to attractive but short-term insights.